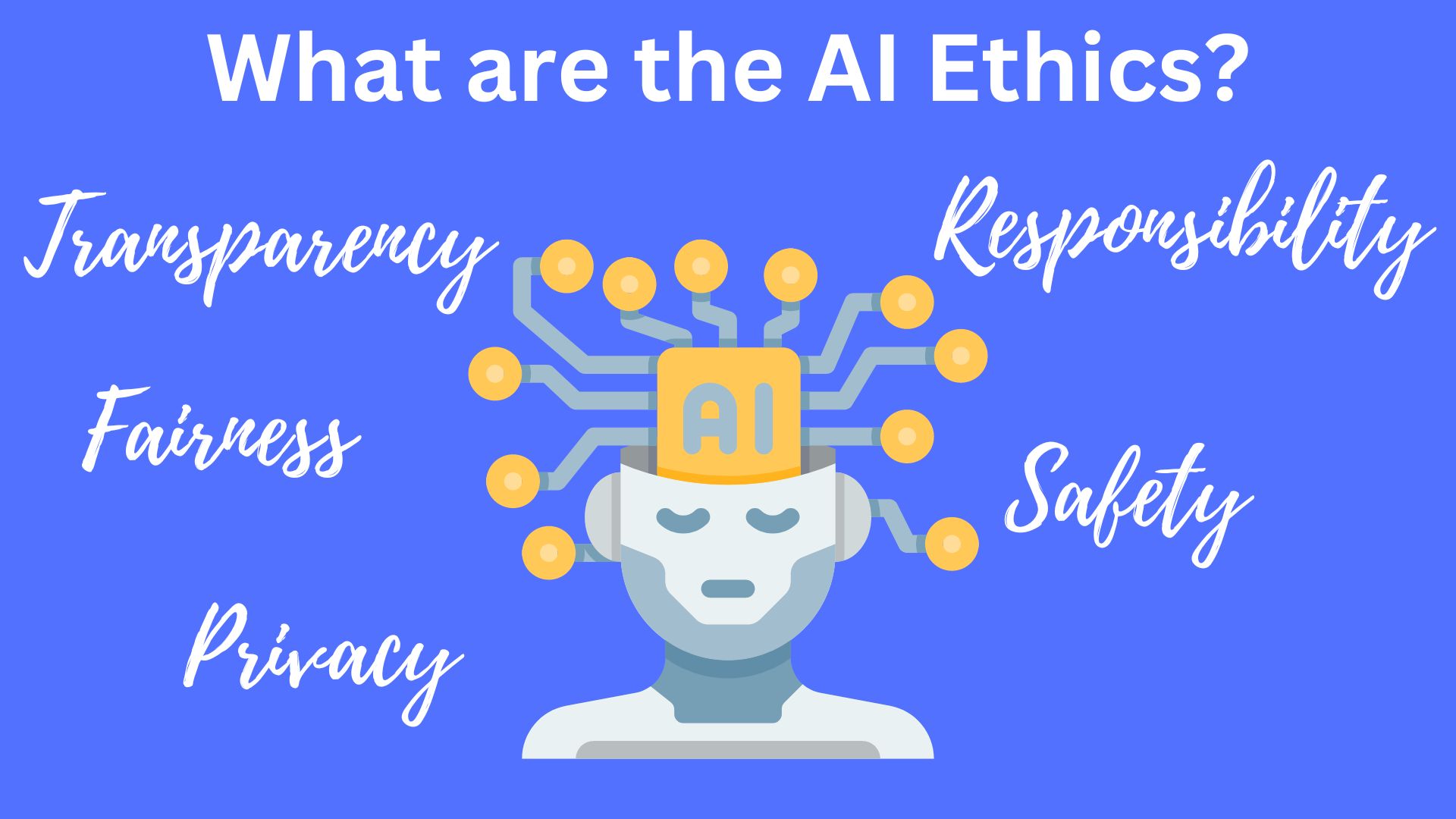

What Is AI Ethics?

Read time: 54 seconds

AI ethics refers to the principles and guidelines that govern the development, deployment, and use of artificial intelligence systems. It encompasses a wide range of issues related to the impact of AI on society, including privacy, accountability, transparency, fairness, and bias.

Some key principles of AI ethics include:

- Transparency: AI systems should be transparent and explainable, so that people can understand how they work and why they make certain decisions.

- Fairness: AI systems should be designed to avoid bias and discrimination, and to treat all individuals equally.

- Privacy: AI systems should respect individuals’ privacy and protect their personal data.

- Responsibility: AI systems should be designed with built-in safeguards and accountability mechanisms to ensure that they are used responsibly and ethically.

- Safety: AI systems should be designed to be safe and to avoid unintended harm.
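
As one illustration of how the fairness principle above might be checked in practice, the sketch below compares how often a system makes a positive decision for each group. The function names `selection_rates` and `demographic_parity_gap`, the group labels, and the sample data are all hypothetical, and this gap is only one of several possible fairness measures, not a complete bias audit.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions (1 = approved, 0 = denied) for two made-up groups.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap near zero means the groups receive positive decisions at similar rates. A large gap does not prove discrimination on its own, but it is a signal that the system deserves closer review.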

AI ethics is a rapidly evolving field, with new issues and challenges arising as AI technology continues to advance.

Therefore, it is important for researchers, developers, and policymakers to consider the ethical implications of AI and to work towards creating responsible and beneficial AI systems.